LabelRadar

LabelRadar is a web platform that streamlines the demo submission process across the music industry. It helps artists get heard and lets labels and promoters review new submissions in an efficient and addictive way.

Role: Since joining Beatport in 2023, I've been the sole designer on LabelRadar's initiatives, working alongside its Product Manager.

Settings 2.0

A complete overhaul of Settings for Artists, Labels, and Contests. It improves the information architecture and distributes all relevant settings across multiple focused tabs.

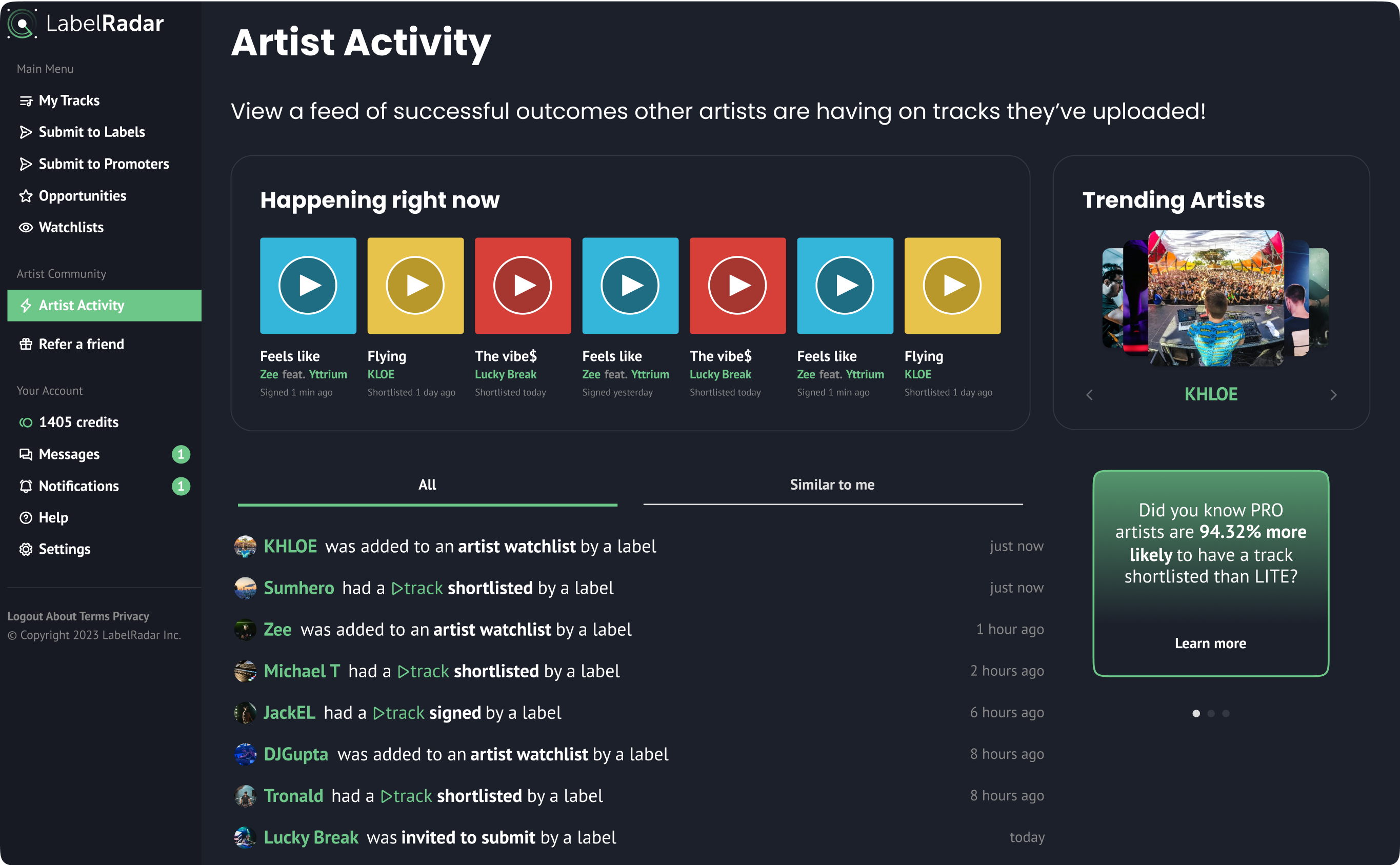

Artist Activity and Network

Creating a space where artists can connect, collaborate, and share their successes or struggles within the app. A few months after launch, 34.4% of connection requests had been accepted, 2.7% had been declined, and 63% were still pending.

Rating labels

Encouraging artists to review their recent interactions with labels on the platform after 72 hours, which fosters transparency and fairness within LabelRadar. The review takes place within the chat, where users rate their experience on three key factors: Communication, Value, and Professionalism. Users can also leave a written message with further feedback.

Displaying reviews

Reviews are shown on label profiles under the "Community Feedback" tab, where users can read both the reviews and the labels' responses. Users can also mark other people's reviews as helpful to highlight valuable feedback.

Contest portals

Enhancing Contest Portals with a new "Entries" tab. It lets artists and external users explore contest submissions, listen to tracks, read descriptions, download track stems, and submit their own entries seamlessly.